Since ChatGPT (Generative Pre-trained Transformer) burst onto the scene in November 2022, it has been making headlines – many argue for all the wrong reasons. Specifically, leaders from the cybersecurity community are speaking out about the potential of this high-powered chatbot to enable, expedite, and even “democratize” cybercrime.

ChatGPT enables users to ask questions about their code, and the program will suggest how to fix it. The idea is that ChatGPT can act as an assistant, a coder or programmer, and even a content creator. Many anticipate that numerous white-collar jobs will be eliminated because of ChatGPT and the similar programs that are bound to follow.

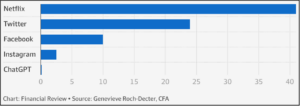

ChatGPT is the brainchild of the AI research and development company OpenAI. Following its launch in November 2022, it amassed over 1 million users in just five days, according to the company’s CEO. That’s fast when you consider that it took Instagram 2.5 months to reach 1 million users, 10 months for Facebook, 24 months for Twitter, and 41 months for Netflix.

Figure 1: Time Taken to Reach 1 Million Users (Months)

ChatGPT is an application that’s trained to draw on massive pools of data to answer diverse queries in an informal manner – similar to how we’d conduct a text or in-person conversation with a friend or co-worker. However, acting like Alexa is just the start of its capabilities. ChatGPT can also admit mistakes, challenge incorrect premises, and reject inappropriate requests. OpenAI plans to launch a more advanced underlying model, GPT-4, this year.

In the realm of technology, developers can ask questions about their code, and the program will offer suggestions on how to improve it. ChatGPT can even write software in different languages and debug the software code itself.

But it’s not just technologists who are embracing ChatGPT; the public is as well. Those who previously turned to web searches to learn about new topics are now relying on ChatGPT. It can help people do anything from preparing for a job interview to drafting proposals and college essays, all with remarkable accuracy, clarity, and speed.

ChatGPT’s capabilities are drawing comment and concern from top minds across multiple industries. For example, ChatGPT is generating fear of a cheating epidemic in schools and universities. New York City's education department recently banned ChatGPT on its networks, citing "concerns about negative impacts on student learning."

Similarly, the use of the chatbot in delivering real-world healthcare services has drawn criticism. Mental health company Koko recently came under fire for using ChatGPT for mental health support without informing affected users of the service.

Many users experimented with ChatGPT in its first few weeks to test the innovative AI technology. Cybersecurity researchers were among those testers, and they found that, even though the application refuses direct requests to write malicious code or to help create bombs, these restrictions can be bypassed. This is very much like the idea that 3D printers won’t print firearms but can print the parts when asked.

Security researchers were thus able to obtain detailed ransomware code by describing the tactics, techniques, and procedures in different ways. Dr. Ozarslan, who has worked in prominent cyber defense organizations such as NATO Science for Peace and Security, and who has received the coveted ‘SANS Institute RSA NetWars Global Interactive Cyber Range Award’ and a ‘Medal of Centre of Excellence Defense Against Terrorism’, described his approach as follows: “I told the AI that I wanted to write software in Swift. I wanted it to find all Microsoft Office files from my MacBook and send them over HTTPS to my webserver. I also wanted it to encrypt all Microsoft Office files on my MacBook and send me the private key for decryption.” As a result, the program generated the malicious code without the request being flagged as malicious.

Dr. Ozarslan further suggested that “sophisticated phishing campaigns and evasion codes to bypass threat detection were also created using the program. This is worrisome because the lack of technical skills stops potentially motivated threat actors from committing an offence. Now this program is accessible to all on the clear web removing the barrier of going on the darkweb. This makes it easy for newbies, wannabes, and script kiddies to learn the ropes without needing to leave the security of the clear web.”

Almost every significant technological breakthrough inevitably comes with information security benefits – and challenges – stirring widespread debate within the cybersecurity fraternity. Case in point: While the exponential benefits of quantum computers are well-accepted, the uncomfortable question remains: When will they be able to break the public-key cryptography we depend on today?

First, let’s look at some of the cybersecurity positives that ChatGPT brings to the table. Like many of its AI and machine learning tool predecessors, ChatGPT could play a vital role in detecting and responding to cyberattacks and streamlining communication within target organizations during such times.

One security researcher publicly shared his positive experience of using ChatGPT as an infosec assistant: “Though there are limitations to this current iteration, I found that it can be used as an all-round assistant that can do a bit of everything but isn’t good at any specific thing. Still, it does offer up lots of potential for future integration with SecOps teams, especially those dealing with scripting, malware analysis, and forensics.”

When it comes to malware, some guardrails are in place, and ChatGPT will refuse to write malicious code if explicitly asked to do so. However, there have been reports that, with some effort, it is possible to bypass these safeguards and generate the desired output. If the user replaces a direct prompt with instructions detailed enough to walk ChatGPT through the steps of writing the malware, it will comply – essentially creating malware on demand.

Given that the malware-as-a-service market on the Dark Web is already burgeoning, there are fears that ChatGPT-generated code could make it quicker and easier for threat actors to launch cyberattacks. In addition, ChatGPT gives amateur attackers the ability to write sophisticated malware code, a task that previously only expert-level hackers could perform. Users of Dark Web forums have been touting ChatGPT as a quick way to make money – some claim to be raking in over $1,000 per day.

Let’s look at a recent example:

In late December 2022, a thread called “ChatGPT – Benefits of Malware” appeared on a popular underground hacking forum. The thread’s publisher revealed that he was experimenting with ChatGPT to recreate malware strains and techniques described in research and write-ups about common malware. He even shared the code of a Python-based stealer that seeks out common file types, copies them to a random folder in the Temp folder, ZIPs them, and then uploads them to a hardcoded FTP server.

In the enterprise space, two particular flavors of cyberattack that experts believe ChatGPT will enable are business email compromise (BEC) and phishing emails.

Most standard enterprise email security tools are designed to detect and block suspected BEC and phishing attacks. They’re trained to recognize the commonly used templates, typos, and other verbiage included in such emails. However, as we addressed earlier, ChatGPT is a remarkably fast and accurate content creator, so it could potentially allow attackers to generate more unique and convincing content for each and every email they distribute. This will make these attacks harder to differentiate from legitimate emails.
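To make the point about template recognition concrete, here is a deliberately simplified Python sketch. It assumes a filter that only compares word overlap against a list of previously seen phishing templates; the template text, the sample emails, the function names, and the 0.6 threshold are all hypothetical, and real email security products use far richer signals.

```python
import re

# Hypothetical wording that a filter has already seen in phishing campaigns.
KNOWN_TEMPLATES = [
    "dear customer your account has been suspended click here to verify your password",
]


def tokens(text: str) -> set[str]:
    """Lower-case the text and return its set of distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap between two texts: 0.0 means none, 1.0 means identical sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def looks_like_known_template(email_body: str, threshold: float = 0.6) -> bool:
    """Flag an email whose wording closely overlaps a known template."""
    words = tokens(email_body)
    return any(jaccard(words, tokens(t)) >= threshold for t in KNOWN_TEMPLATES)


# A recycled template with one extra word is caught by the overlap check.
recycled = ("Dear customer, your account has been suspended. "
            "Click here to verify your password now.")

# A uniquely worded message shares no vocabulary with the template.
bespoke = ("Hi Maria, following up on yesterday's invoice discussion: could you "
           "confirm the updated banking details before Friday's payment run?")

print(looks_like_known_template(recycled))  # True
print(looks_like_known_template(bespoke))   # False
```

The second message slips through because nothing in it resembles the stored template, which is the weakness that individually generated content exploits.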

To illustrate the scale and seriousness of the potential problem, a threat intelligence researcher recently told Forbes that ChatGPT will be a “great tool” for Russian hackers who are not fluent in English to craft legitimate-looking phishing emails.

It’s not all bad news: cybersecurity researchers could also use ChatGPT for defensive tasks. These include creating usable YARA rules and spotting buffer overflows in code to limit criminal opportunities. YARA rules identify and classify malicious code based on textual or binary patterns. We recommend that your programmers, developers, and other staff do not use these services to write code for your organization, as code created this way has been reported to be prone to vulnerabilities.
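The idea behind pattern-based rules can be sketched in a few lines of Python. This is only an imitation of the concept, not YARA syntax and not the API of any YARA library; the `PatternRule` class, the rule names, and the marker bytes are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatternRule:
    """A named set of byte patterns plus how many must appear for a match."""
    name: str
    patterns: tuple[bytes, ...]
    min_matches: int = 1

    def matches(self, data: bytes) -> bool:
        # Count how many of the rule's patterns occur anywhere in the data.
        hits = sum(1 for pattern in self.patterns if pattern in data)
        return hits >= self.min_matches


def classify(data: bytes, rules: list[PatternRule]) -> list[str]:
    """Return the names of all rules that match the given bytes."""
    return [rule.name for rule in rules if rule.matches(data)]


# Hypothetical rules: the markers are placeholders, not real malware signatures.
RULES = [
    PatternRule("demo_family_a",
                (b"EXAMPLE_MARKER_ONE", b"\xde\xad\xbe\xef"),
                min_matches=2),
    PatternRule("demo_family_b", (b"EXAMPLE_MARKER_TWO",)),
]

sample = b"header EXAMPLE_MARKER_ONE payload \xde\xad\xbe\xef trailer"
print(classify(sample, RULES))  # ['demo_family_a']
```

Requiring several patterns to appear together, as `min_matches=2` does here, is one simple way to reduce false positives from a single common string.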

We at Hitachi Systems Security, Threat Intelligence recommend being extra wary of any email whose source you are unsure of. Do not download any documents or follow a URL unless you’ve validated its origin. Ensure your EDR, your banner, DMARC, SPF, and DKIM are all up to date.
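As one way to review a DMARC setup, the Python sketch below parses a DMARC record string and reports whether its policy actually enforces anything. It assumes you have already retrieved the TXT record published at `_dmarc.<your domain>`; the DNS lookup is left out, and the function names and example records are illustrative only.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def is_enforcing(record: str) -> bool:
    """True when the record is DMARC and its policy is quarantine or reject.

    A policy of "none" only requests reports and does not ask receivers
    to act on messages that fail authentication.
    """
    tags = parse_dmarc(record)
    policy = tags.get("p", "").lower()
    return tags.get("v") == "DMARC1" and policy in {"quarantine", "reject"}


# Example records using a reserved documentation domain.
monitoring_only = "v=DMARC1; p=none; rua=mailto:reports@example.com"
enforced = "v=DMARC1; p=reject; rua=mailto:reports@example.com"

print(is_enforcing(monitoring_only))  # False
print(is_enforcing(enforced))         # True
```

A record that is still at `p=none` is worth revisiting, since receivers are not being asked to quarantine or reject spoofed mail for that domain.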

As an industry, we can’t afford to rest in the face of this latest cybersecurity threat. Fortunately, security vendors are already stepping up by developing more sophisticated behavioral AI-based tools to detect ChatGPT-generated attacks.

At the time of writing, there are no legislated protections against the criminal use of ChatGPT, but this may change in the future.

In the meantime, be sure to brush up on the basics. Deployment of cloud-based security solutions is non-negotiable as a first line of defense in preventing sophisticated BEC or phishing emails from reaching your end users. This should include inbound and outbound data loss prevention, email encryption, antivirus, and anti-malware.

We also recommend implementing “refresher” security awareness training in which you alert employees to this new breed of threat and its capability to deliver even trickier emails as part of phishing, whaling, smishing, and other attacks.